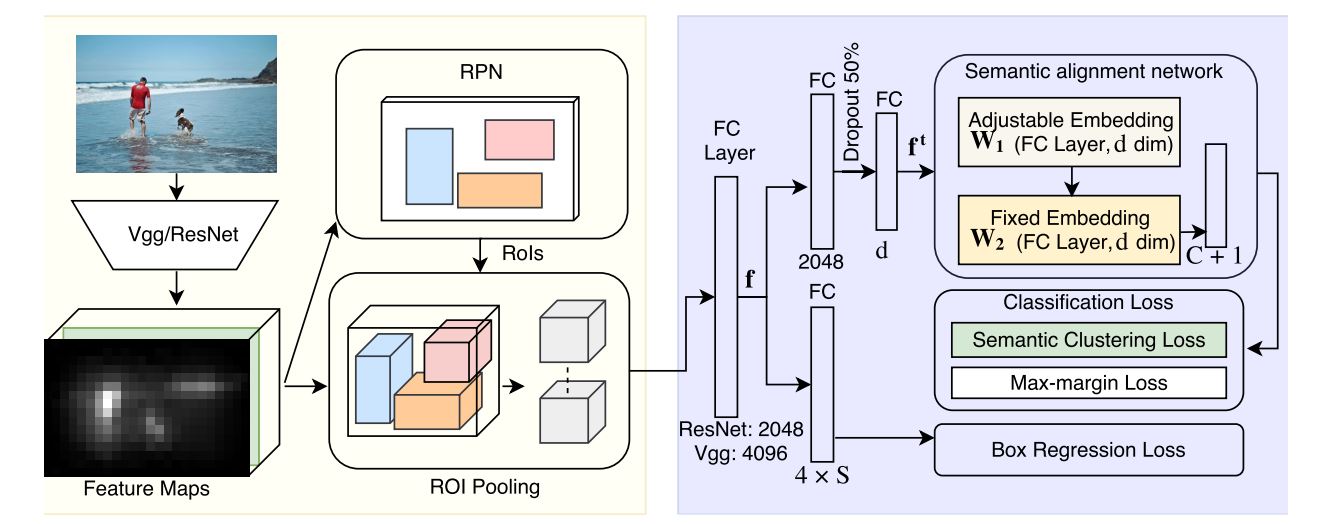

S. Rahman, S. H. Khan, F. Porikli. Zero-Shot Object Detection: Learning to Simultaneously Recognize and Localize Novel Concepts. Asian Conference on Computer Vision (ACCV), Perth, December 2018. [Link to Paper]

S. Rahman, S. H. Khan, F. Porikli. Zero-Shot Object Detection: Joint Recognition and Localization of Novel Concepts. International Journal of Computer Vision (IJCV), Springer 2020. [Link to Paper]

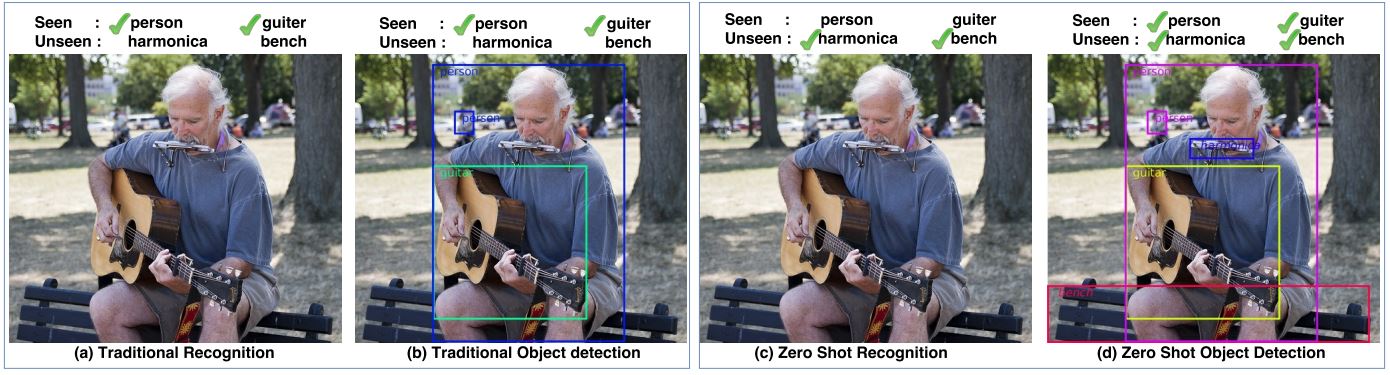

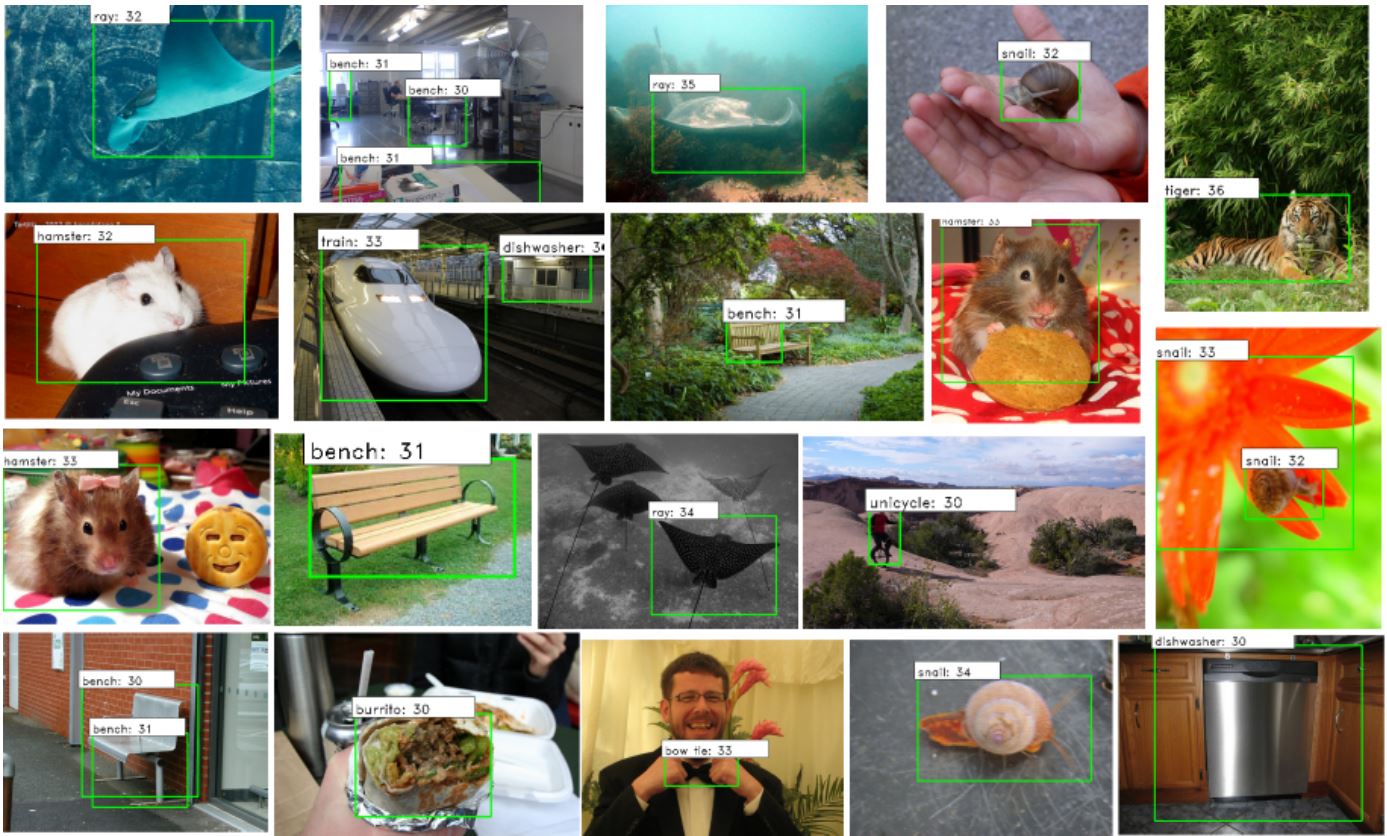

None of the detected classes were seen by the model during training.

Citations

S. Rahman, S. H. Khan and F. Porikli, “Zero-Shot Object Detection: Joint Recognition and Localization of Novel Concepts,” International Journal of Computer Vision (IJCV), Springer 2020.

@article{rahman2018zeroshot,
  title={Zero-Shot Object Detection: Learning to Simultaneously Recognize and Localize Novel Concepts},
  author={Rahman, Shafin and Khan, Salman and Porikli, Fatih},
  journal={Asian Conference on Computer Vision (ACCV)},
  publisher={LNCS, Springer},
  year={2018}
}

@article{rahman2020zeroshot,
  title={Zero-Shot Object Detection: Joint Recognition and Localization of Novel Concepts},
  author={Rahman, Shafin and Khan, Salman and Porikli, Fatih},
  journal={International Journal of Computer Vision (IJCV)},
  publisher={Springer},
  year={2020}
}

Follow-up Work

Acknowledgement